How computers have (mostly) conquered chess, poker, and more

Leon Neal // Getty Images


Computers have raced forward for decades, from machines programmed with manual punch cards to systems that are now changing how much of humanity works and lives.

Artificial intelligence is one field within computing. It is concerned less with the mechanical guts of our machines than with how we can teach them to reason, strategize, and even delay their actions so they seem and behave more, well, human. As experts have built more and more powerful AIs, they’ve sought ways to show how good those AIs are, which can be hard to do in a relatable, quantifiable way.

Enter the classic game format. Games are tailor-made for AI demonstrations for several reasons. Some are “solvable,” meaning the outcome of perfect play can, in principle, be worked out mathematically, and their demands (speed, many interacting factors, long-term strategy) let programmers show off truly multidimensional reasoning.

To illustrate this, PokerListings assembled a list of breakthrough gaming wins for AI, from traditional board games to imperfect-information games to video games. Apart from the fact that AI can now beat human players at all of them, the games listed here sometimes have little in common. They range from classic analog games like chess and Go to Texas Hold ’Em poker and some of today’s most popular multiplayer esports titles.

Keep reading to learn more about the eight significant instances when AIs beat human players at their own game.


TOM MIHALEK/AFP // Getty Images

Chess

– Year AI had a benchmark win: 1997

Chess has long been quick to embrace new technology, from games conducted by letter or telegram to early internet servers that hosted primitive chess clients.

The game of chess is deceptively simple: Each player has 16 pieces (eight identical pawns plus a king, a queen, and two each of rooks, bishops, and knights), and each type moves in its own pattern. The goal is to checkmate the opponent’s king, trapping it so it cannot escape capture. The mathematics of chess is technically finite: there are only so many possible positions, even if the number is astronomically large. That means computers have always been in the game, so to speak, learning chess moves and processing long lists of possibilities at ever faster speeds.

In 1997, Russian chess grandmaster and reigning world champion Garry Kasparov lost a six-game match to IBM’s supercomputer Deep Blue, which could evaluate roughly 200 million positions per second, far more than any human brain can consider.
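That kind of brute-force lookahead is usually built on minimax search: the program assumes both sides play their best, explores possible moves and replies, and scores the resulting positions. Classical engines like Deep Blue rely on an optimized form of this idea called alpha-beta pruning, which skips lines the opponent would never allow. The minimal sketch below runs it on a tiny hypothetical game tree with made-up leaf scores; it is an illustration of the technique, not Deep Blue’s actual code, and the function name `alphabeta` and the nested-list tree format are assumptions for this example.

```python
import math
from typing import List, Optional, Union

# A hypothetical game tree: inner lists are positions with moves still to
# play; numbers are leaf scores from the first (maximizing) player's view.
Tree = Union[float, List["Tree"]]


def alphabeta(node: Tree, maximizing: bool,
              alpha: float = -math.inf, beta: float = math.inf,
              visited: Optional[List[int]] = None) -> float:
    """Minimax value of `node`, skipping branches that cannot change it."""
    if visited is None:
        visited = [0]
    if not isinstance(node, list):
        visited[0] += 1  # count leaf evaluations actually performed
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the minimizing side would never allow this line
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:  # the maximizing side already has something better
            break
    return best


visited = [0]
tree = [[3, 5], [2, 9], [0, 1]]  # three moves, each with two possible replies
print(alphabeta(tree, True, visited=visited), visited[0])  # prints: 3 4
```

On this toy tree the search finds the same best value as exhaustive minimax (3) while evaluating only four of the six leaves; in real chess, where each position has dozens of moves, that pruning saves an enormous amount of work.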

Ben Hider // Getty Images

Jeopardy!

– Year AI had a benchmark win: 2011

“Jeopardy!” is a long-running American quiz show where contestants phrase their answers in the form of a question. In 2011, the show was the site of an astonishing victory when IBM’s artificial intelligence, Watson, handily beat two human contestants. And the two humans were nobody to sneeze at, either, as both were iconic former champions: Ken Jennings, who held the show’s longest winning streak, and Brad Rutter, who had won the most total prize money in the show’s history.

Watson ran on a cluster of powerful servers housed in 10 racks. It also had to be trained not just on general knowledge but on the style and structure of “Jeopardy!” clues, making the victory all the more impressive.

Andreas Rentz // Getty Images

Atari

– Year AI had a benchmark win: 2013

For people over a certain age, familiarity with Atari is practically guaranteed. The iconic video game and console maker captured the public imagination, and its influence on pop culture was so great that even nongamers took notice. The Atari 2600 console, released in 1977, was a major step forward for home gaming and shipped with both joystick and paddle controllers. That means any computer trying to do well at Atari games must both understand what is happening on screen and turn that information into control inputs: a direction to move plus button presses.

In 2013, researchers at DeepMind published the results of a study in which a single program, learning directly from the screen’s pixels and the game score, outperformed a human expert at “Breakout,” “Enduro,” and “Pong.”
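That study used a technique called deep Q-learning. The program learns a score, Q, for every action in every situation, estimating how much future reward the action will lead to, and then plays the highest-scoring action. DeepMind used a neural network to estimate Q from raw pixels; the minimal sketch below swaps that network for a simple lookup table and swaps Atari for a hypothetical five-square corridor with a reward at the far end, so the learning rule itself is easy to see. The environment, function names, and parameter values are illustrative assumptions.

```python
import random

N_STATES = 5          # squares 0..4 in a toy corridor; square 4 is the goal
ACTIONS = (-1, +1)    # action 0 = move left, action 1 = move right


def env_step(state, action):
    """Hypothetical toy environment: reward 1 only for reaching the goal."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action] lookup table
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.randrange(2)  # explore with a purely random behavior policy
            nxt, reward, done = env_step(state, action)
            # Q-learning target: reward now plus discounted best value afterward.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
policy = [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # expected: [1, 1, 1, 1], i.e. always move right
```

Because Q-learning learns the value of the best behavior even while acting randomly, the finished table prefers “move right” in every square, the shortest route to the reward.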

Barry Chin/The Boston Globe // Getty Images

Poker

– Year AI had a benchmark win: 2015

Poker is the collective name for a family of card games, played in different styles, in which players typically bet chips or money. One of the major challenges with poker is that the computer, like any player, has imperfect information: some of what happens, such as which cards an opponent holds, is known to only one player, who doesn’t share it. Unlike perfect-information games such as Connect Four and checkers, poker doesn’t make every piece in play visible to everyone at once.

In computing terms, imperfect information is like a black box with mystery contents. But in 2015, a computer algorithm called CFR+ cracked the black box open. Texas Hold ’Em is one of the most popular poker games, and its heads-up limit variant has only two players and fixed bet sizes, which makes “solving” the game with a computer simpler than when there are more players in the mix. Using CFR+, University of Alberta researchers “essentially solved” heads-up limit Hold ’Em with a program called Cepheus, meaning its strategy is so close to perfect that no human could expect to reliably beat it, even over a lifetime of play.
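CFR+ (counterfactual regret minimization plus) is too involved to reproduce here, but its core building block is a simple rule called regret matching+: at each decision, play each option in proportion to how much better it would have done in the past, and never let those accumulated regrets drop below zero. The minimal sketch below applies that rule in self-play to rock-paper-scissors, used here as a hypothetical stand-in for poker because neither player sees the other’s choice in advance. The game, function names, and starting values are assumptions for illustration; a real poker solver applies the rule at every decision point of an enormous game tree.

```python
# Payoff to a player choosing action a against an opponent choosing b.
# Actions: 0 = rock, 1 = paper, 2 = scissors. The game is symmetric,
# so the same table works for both players.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]
N = 3


def strategy_from_regrets(q):
    """Regret matching: play actions in proportion to positive regret."""
    total = sum(q)
    if total > 0:
        return [x / total for x in q]
    return [1.0 / N] * N


def regret_matching_plus(iterations, init_regrets):
    q = [list(init_regrets[0]), list(init_regrets[1])]  # clipped cumulative regrets
    avg = [[0.0] * N, [0.0] * N]                         # running strategy sums
    for _ in range(iterations):
        strats = [strategy_from_regrets(q[0]), strategy_from_regrets(q[1])]
        for p in range(2):
            me, opp = strats[p], strats[1 - p]
            # Expected payoff of each pure action against the opponent's mix.
            values = [sum(PAYOFF[a][b] * opp[b] for b in range(N)) for a in range(N)]
            current = sum(me[a] * values[a] for a in range(N))
            for a in range(N):
                # The "+" in regret matching+: floor cumulative regret at zero.
                q[p][a] = max(0.0, q[p][a] + values[a] - current)
                avg[p][a] += me[a]
    # The average strategy, not the latest one, is what approaches equilibrium.
    return [[x / iterations for x in row] for row in avg]


# Player 1 starts out all-rock, player 2 all-scissors.
avg = regret_matching_plus(20000, [[5.0, 0.0, 0.0], [0.0, 0.0, 5.0]])
print([round(x, 3) for x in avg[0]])
```

Even though both players start out heavily biased, their average strategies should settle close to one-third for each option, the unexploitable equilibrium of rock-paper-scissors.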

Google // Getty Images

Go

– Year AI had a benchmark win: 2016

“Go” is one of the oldest games still played by humans, and its playspace is far more complex than even chess. It’s played on a 19-by-19 board with up to hundreds of stones, and a typical position offers around 250 possible moves compared with roughly 35 in chess, making the number of possible games exponentially greater. For this reason, many people long believed that computers weren’t really capable of figuring it out.
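A back-of-the-envelope calculation shows how large that gap is. If a game offers about b legal moves per turn and lasts about d moves, the number of possible move sequences is roughly b^d. The sketch below uses commonly cited ballpark estimates (b ≈ 35 and d ≈ 80 for chess, b ≈ 250 and d ≈ 150 for Go), which are assumptions rather than exact counts, and works in powers of ten to keep the numbers readable.

```python
import math

# Rough, commonly cited estimates (assumptions, not exact counts):
# b = typical number of legal moves per turn, d = typical game length in moves.
GAMES = {"chess": (35, 80), "go": (250, 150)}


def tree_size_exponent(branching: int, depth: int) -> float:
    """Return log10(branching ** depth), the power of ten in b^d."""
    return depth * math.log10(branching)


for name, (b, d) in GAMES.items():
    print(f"{name}: about 10^{tree_size_exponent(b, d):.0f} move sequences")
```

By this estimate, chess has on the order of 10^124 possible move sequences and Go on the order of 10^360, far too many for brute force alone.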

In 2016, however, Google DeepMind’s AlphaGo finally bested one of the world’s best human players, Lee Sedol, winning their five-game match four games to one. To achieve this, the system first used deep learning to train neural networks on a large database of moves from games between strong human players, learning to predict what an expert would play. Then copies of the program played each other over and over, using reinforcement learning to develop moves even stronger than the human-derived ones it had started from.

Aaron Ontiveroz/The Denver Post // Getty Images

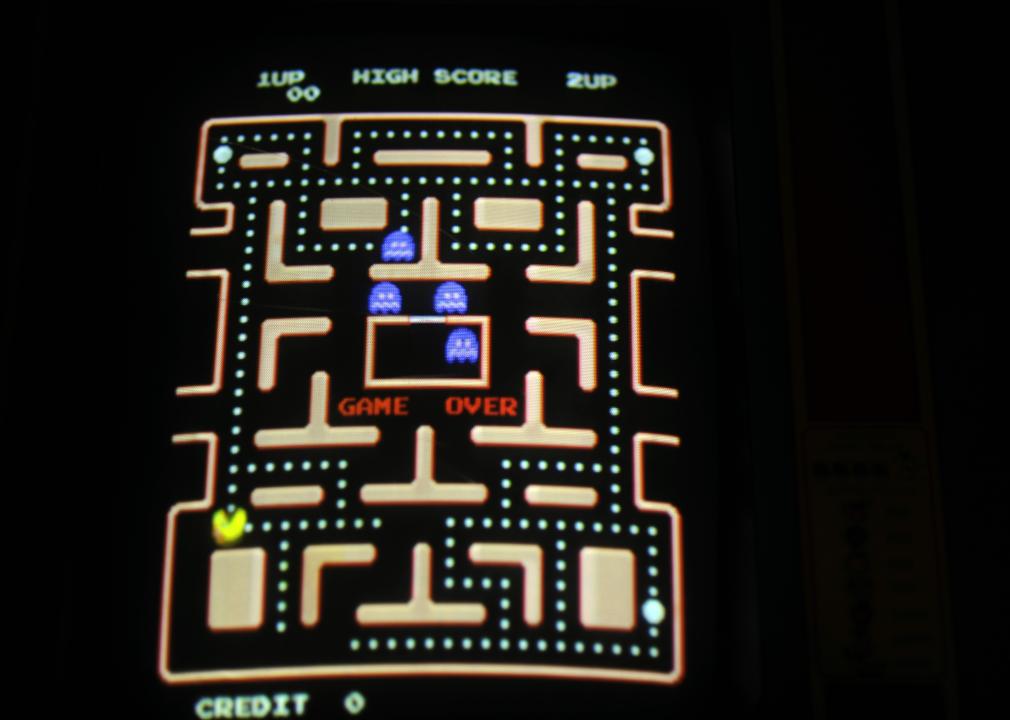

Ms. Pac-Man

– Year AI had a benchmark win: 2017

“Pac-Man” is a classic arcade game in which players control a hungry yellow circle that chows down on mysterious white dots, all while being pursued by colorful pixelated ghosts determined to catch and eat it. “Ms. Pac-Man” is a notoriously tough sequel that fans argue is even better than the original. To conquer it, Maluuba, an AI company acquired by Microsoft, designed a system that experts fittingly described as “divide and conquer.” The team split the game into many small goals, like escaping a particular ghost or reaching a particular white dot, and gave each goal to its own simple agent. Then a “manager” weighed all of those agents’ suggestions to decide, in the moment, on the best strategic move. In 2017, the system reached the maximum possible score of 999,990 on the Atari 2600 version of the game.
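The “divide and conquer” idea can be sketched in a few lines. In the minimal example below, each hypothetical sub-agent cares about exactly one thing (a single pellet or a single ghost) and scores every possible move from its own narrow point of view; a manager function adds up those scores and picks the move with the best total. In Maluuba’s published approach, which it called a Hybrid Reward Architecture, the sub-agents learned their scores through reinforcement learning; here they are hand-written distance rules on a toy grid, and all names, positions, and penalty values are illustrative assumptions.

```python
from typing import Callable, Dict, Tuple

Pos = Tuple[int, int]
MOVES: Dict[str, Pos] = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
SubAgent = Callable[[Pos], Dict[str, float]]


def step(pos: Pos, move: str) -> Pos:
    dx, dy = MOVES[move]
    return (pos[0] + dx, pos[1] + dy)


def dist(a: Pos, b: Pos) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def pellet_agent(pellet: Pos) -> SubAgent:
    """Hypothetical sub-agent that only cares about reaching one pellet."""
    return lambda pos: {m: -float(dist(step(pos, m), pellet)) for m in MOVES}


def ghost_agent(ghost: Pos, penalty: float = 10.0) -> SubAgent:
    """Hypothetical sub-agent that only cares about staying clear of one ghost."""
    return lambda pos: {
        m: -penalty if dist(step(pos, m), ghost) <= 1 else 0.0 for m in MOVES
    }


def manager(pos: Pos, agents) -> str:
    """Sum every sub-agent's scores and choose the best overall move."""
    totals = {m: sum(agent(pos)[m] for agent in agents) for m in MOVES}
    return max(totals, key=totals.get)


agents = [pellet_agent((3, 0)), pellet_agent((0, 3)), ghost_agent((2, 0))]
print(manager((0, 0), agents))  # prints: down
```

With one pellet to the right but a ghost in the way, the agent chasing that pellet alone would head right; the ghost-avoiding agent’s heavy penalty outweighs it, so the manager heads down toward the other pellet instead.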

Leon Neal // Getty Images

StarCraft II

– Year AI had a benchmark win: 2019

“StarCraft II” is a multiplayer real-time strategy (RTS) game released in 2010 that has been free-to-play since 2017. In RTS games, players act simultaneously rather than taking turns, so there’s a huge competitive emphasis on speed, measured in actions per minute (APM). The game has a thriving esports scene, with professionals who can reach or top 300 APM, or five actions every second.

Google’s DeepMind set its sights on “StarCraft II” as a worthy challenge after its work on “Go,” Atari games, and others. In 2019, its AI, AlphaStar, reached the top 0.15% of the 90,000 players on the game’s European servers at the time.

Suzi Pratt/FilmMagic // Getty Images

Dota 2

– Year AI had a benchmark win: 2019

“Dota 2” is a multiplayer online battle arena (MOBA) game, meaning two teams of players face each other on a premade battle map, each trying to destroy the other’s base.

First released in 2013, “Dota 2” offers more than 100 playable characters, called heroes, each with different strengths and weaknesses. The game is massively popular and has a huge esports scene. Part of the appeal of trying to beat humans at a game like “Dota 2” is that such games make great, relatable demonstrations of how AIs can think on their feet, so to speak, in complicated, ever-changing environments. OpenAI’s system, OpenAI Five (so named because two teams of five players compete in “Dota 2”), defeated OG, the reigning world champion team, in 2019.

This story originally appeared on PokerListings and was produced and distributed in partnership with Stacker Studio.